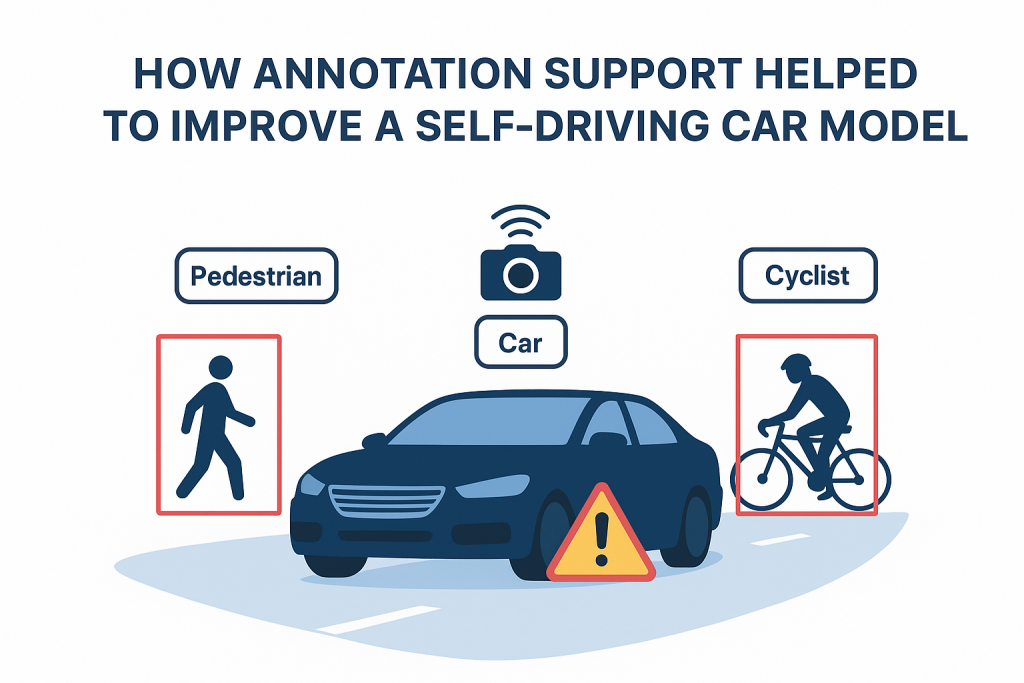

How Annotation Support Helped Improve a Self-Driving Car Model

Introduction

Self-driving vehicles combine several technologies, including AI models trained to perceive the world much as a human driver does: recognising roads, cars, pedestrians, traffic signs and other objects in real time. High-quality labelled datasets are the main factor in building accurate models. This case study describes how a poorly performing autonomous driving system became safer and more reliable through Annotation Support's professional annotation services.

1. The Challenge

An autonomous vehicle company faced the following challenges:

What was the fundamental problem? Inaccurate and inconsistent data labelling by a previously outsourced vendor.

2. Project Goals

3. Annotation Techniques Used by Annotation Support

Annotation Support applied the following techniques:

- Bounding boxes and polygons: cars, trucks, buses, pedestrians and cyclists
- Semantic segmentation: pixel-level labels for roads, sidewalks, curbs and lane lines
- LiDAR 3D point cloud annotation: labelling depth and distance in LiDAR data
- Keypoint annotation: wheel, headlight and pedestrian joint locations, used to predict direction of movement
- Occlusion and truncation labels: marking occluded or truncated objects for detection training

4. Quality Control Measures

5. Results

Three months after the data was re-annotated and the dataset scaled up:

6. Key Learning Points

Conclusion

Annotation Support does not just deliver labelled data: its clean, consistent, context-aware annotations contributed directly to better decision-making by the AI model. In autonomous driving, the quality of perception data can mean the difference between a near miss and an accident. With high-quality annotations, the self-driving car model became safer, faster and more reliable, bringing it one step closer to real-world deployment.
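To make the 2D annotation types from section 3 more concrete, here is a small Python sketch of how one labelled object might be represented. It is hypothetical throughout. The class and field names (`BoundingBox`, `Keypoint`, `ObjectLabel`, `occluded`, `truncated`), the `labels_agree` helper and its 0.7 threshold are illustrative assumptions, not Annotation Support's actual format or quality-control process. The sketch covers only camera-image object labels (boxes, polygons, keypoints, occlusion and truncation flags). Semantic segmentation masks and LiDAR 3D cuboids would need their own structures. The intersection-over-union (IoU) check shows one common way to measure whether two labels of the same object agree, which is the kind of inconsistency described in the challenge.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical schema for one labelled object in a camera frame.
# Names are illustrative only, not any vendor's real annotation format.


@dataclass
class BoundingBox:
    # Axis-aligned box in pixel coordinates: (x_min, y_min) is top-left,
    # (x_max, y_max) is bottom-right.
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Clamp at zero so a malformed box never yields a negative area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


@dataclass
class Keypoint:
    name: str       # e.g. "front_left_wheel", "left_headlight", "left_knee"
    x: float
    y: float
    visible: bool   # False when the point is hidden behind another object


@dataclass
class ObjectLabel:
    category: str   # e.g. "car", "truck", "pedestrian", "cyclist"
    box: BoundingBox
    # Optional tighter outline as a list of (x, y) vertices.
    polygon: List[Tuple[float, float]] = field(default_factory=list)
    keypoints: List[Keypoint] = field(default_factory=list)
    occluded: bool = False    # partly hidden by another object
    truncated: bool = False   # partly outside the image frame


def iou(a: BoundingBox, b: BoundingBox) -> float:
    """Intersection over union: 0.0 for disjoint boxes, 1.0 for identical ones."""
    ix = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    iy = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = ix * iy
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def labels_agree(a: ObjectLabel, b: ObjectLabel, min_iou: float = 0.7) -> bool:
    """Hypothetical consistency rule for two annotators labelling the same object."""
    return (
        a.category == b.category
        and a.occluded == b.occluded
        and a.truncated == b.truncated
        and iou(a.box, b.box) >= min_iou
    )


if __name__ == "__main__":
    # Two annotators box the same car with a 5-pixel offset.
    first = ObjectLabel("car", BoundingBox(100, 50, 200, 150))
    second = ObjectLabel("car", BoundingBox(105, 55, 205, 155))
    print(round(iou(first.box, second.box), 3))  # 0.822
    print(labels_agree(first, second))           # True
```

A fixed IoU threshold is a simple choice. Real pipelines often tune it per object class, because a few pixels of disagreement matter far more for a distant pedestrian than for a nearby truck.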