Top Annotation Techniques Used in Autonomous Vehicle Datasets

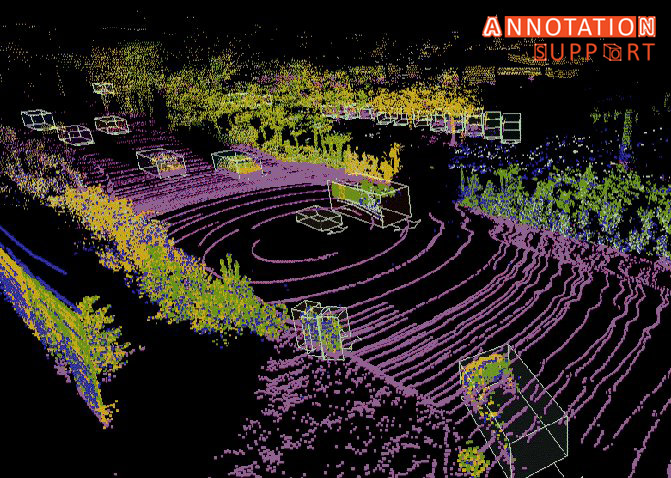

Autonomous vehicles rely heavily on high-quality annotated data to interpret the world around them. From reading traffic signs to detecting pedestrians, their success hinges on precise data labelling. To train these systems effectively, several annotation techniques are used to handle the wide range of data collected from cameras, LiDAR, radar, and other sensors. Below are the top annotation techniques commonly used in autonomous vehicle datasets:

1. 2D Bounding Boxes
Purpose: To detect and localize objects (such as vehicles, pedestrians, and road signs) in 2D camera images.
How it Works: Rectangular boxes are drawn around objects of interest in camera images, and each box is given a class label (e.g., car, bicycle, stop sign).
Use Cases:

2. 3D Bounding Boxes
Purpose: To capture the position and spatial extent of objects in 3D space.
How it Works: Cuboids are drawn around objects in 3D point cloud data (typically from LiDAR), encoding each object's dimensions (depth, width, height) and rotation.
Use Cases:

3. Semantic Segmentation
Purpose: To assign a class label to every pixel in an image (2D) or every point in a point cloud (3D).
How it Works: Each pixel is classified according to the object or surface it belongs to (e.g., road, sidewalk, pedestrian). All objects of the same class share a single label.
Use Cases:

4. Instance Segmentation
Purpose: To recognize individual objects and their boundaries, even when several objects belong to the same class.
How it Works: Combines object detection with semantic segmentation so that each object instance receives its own separate mask (e.g., car 1 and car 2 rather than a single "car" region).
Use Cases:

5. Keypoint Annotation
Purpose: To mark specific points of interest on objects (e.g., human joints, corners of traffic signs).
How it Works: Annotators tag key parts such as elbows and knees on pedestrians, or wheels and headlamps on vehicles.
Use Cases:

6. Lane Annotation
Purpose: To precisely identify and mark lanes and lane boundaries in driving scenes.
How it Works: Lines or curves are drawn over the lanes visible in camera images. On curved roads, these lines are commonly fitted with polynomials.
Use Cases:

7. Cuboid Annotation for Sensor Fusion
Purpose: To combine 2D and 3D annotations across several sensors (camera + LiDAR) to improve accuracy.
How it Works: 3D annotations made on LiDAR data are projected onto the corresponding 2D camera images, where they are refined using the combined sensor inputs.
Use Cases:

8. Polygon Annotation
Purpose: To label objects with irregular shapes and sharp edges.
How it Works: Instead of a rectangular bounding box, a polygon is drawn along the object's contour.
Use Cases:

9. Trajectory Annotation
Purpose: To track the motion paths of dynamic objects across frames.
How it Works: Object positions are tagged over time to capture velocity and direction and to help predict future motion.
Use Cases:

Conclusion
Proper labelling is the foundation of autonomous vehicle development. Each annotation method serves its own purpose, whether that is identifying a pedestrian in a crosswalk or the drivable path ahead. As the industry moves toward full autonomy, these annotation methods keep getting more accurate, faster, and more scalable with AI-assisted tools and human-in-the-loop frameworks. Ultimately, this is not just about training a car but about teaching a machine to understand the complexities of real-world driving.
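The lane annotation technique (section 6) mentions fitting polynomials to lane lines on curved roads. The sketch below shows one way such a fit could work: a least-squares fit of a quadratic to points an annotator clicks along a lane marking. It assumes pixel coordinates, with x modeled as a function of the image row y. The function names `fit_lane_polynomial` and `eval_lane` are hypothetical and do not belong to any annotation tool's API. A production pipeline would more likely call a library routine such as NumPy's `polyfit`. Plain Python is used here only to keep the example self-contained.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) pixel coordinates of an annotator's click


def fit_lane_polynomial(points: Sequence[Point], degree: int = 2) -> List[float]:
    """Least-squares fit of x = c0 + c1*y + ... + c_d*y**d to lane points.

    Assumption of this sketch: lanes run roughly top-to-bottom in a
    forward-facing camera image, so x is modeled as a function of row y.
    Returns coefficients [c0, c1, ..., c_degree], lowest order first.
    """
    n = degree + 1
    if len(points) < n:
        raise ValueError("need at least degree + 1 points")

    # Build the normal equations (A^T A) c = A^T x, where A[i][j] = y_i ** j.
    ata = [[0.0] * n for _ in range(n)]
    atx = [0.0] * n
    for x, y in points:
        powers = [y ** j for j in range(n)]
        for r in range(n):
            atx[r] += powers[r] * x
            for c in range(n):
                ata[r][c] += powers[r] * powers[c]

    # Forward elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(ata[r][col]))
        ata[col], ata[pivot] = ata[pivot], ata[col]
        atx[col], atx[pivot] = atx[pivot], atx[col]
        if abs(ata[col][col]) < 1e-12:
            raise ValueError("points must span at least degree + 1 distinct rows")
        for r in range(col + 1, n):
            factor = ata[r][col] / ata[col][col]
            for c in range(col, n):
                ata[r][c] -= factor * ata[col][c]
            atx[r] -= factor * atx[col]

    # Back substitution to recover the coefficients.
    coeffs = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = atx[r] - sum(ata[r][c] * coeffs[c] for c in range(r + 1, n))
        coeffs[r] = s / ata[r][r]
    return coeffs


def eval_lane(coeffs: Sequence[float], y: float) -> float:
    """Evaluate the fitted lane curve (x position) at image row y."""
    return sum(c * y ** j for j, c in enumerate(coeffs))


if __name__ == "__main__":
    # Clicks sampled from x = 3 - 2y + 0.5y^2.
    clicks = [(3.0, 0.0), (1.5, 1.0), (1.0, 2.0), (1.5, 3.0), (3.0, 4.0)]
    coeffs = fit_lane_polynomial(clicks)
    print([round(c, 6) for c in coeffs])  # → [3.0, -2.0, 0.5]
```

Once a curve is fitted, a labelling tool can evaluate it at any row to smooth the annotator's clicks or to densify a lane label into evenly spaced points. Modeling x as a function of y avoids the near-vertical slopes that a y-of-x fit would produce for lanes seen from a forward-facing camera.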